Nvidia Blackwell: next generation of AI accelerators unveiled

Nvidia is the undisputed leader in the market for AI accelerators. The company wants to further extend this lead and is launching the next generation: the Blackwell platform.

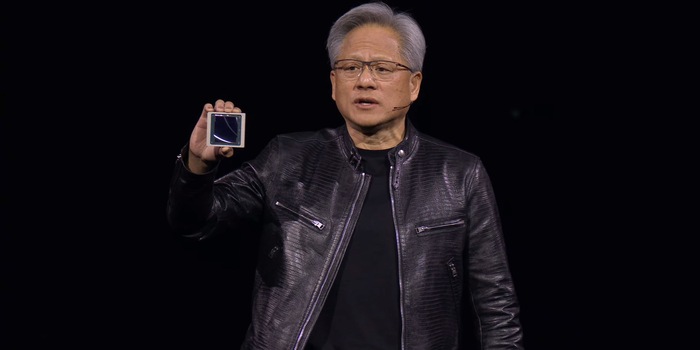

Nvidia CEO Jensen Huang presented the Blackwell platform at the company's own developer conference GTC in San Jose. It includes various products from chips such as the B100 to the DGX GB200 SuperPOD. These will be launched on the market in the course of the year. The new chips are said to be up to 25 times more energy efficient than their predecessors.

The Blackwell platform is based on the latest GPU architecture from Nvidia. Its name is also Blackwell, named after Dr David Harold Blackwell, an American mathematician. With the new products, the chip giant wants to build on the success of the Hopper chip GH100, which has made AI (artificial intelligence) a booming industry and Nvidia the third most valuable company in the world.

Speaking of value: Nvidia is not naming prices for the new chips. However, given the ongoing AI boom, the manufacturer is likely to charge a hefty premium for them.

Little data on the chip

A new feature of Blackwell is that each GPU consists of two chips that are coupled together. They are connected via a 10-terabyte-per-second interface (5 terabytes per second in each direction). The accelerator has a total of 208 billion transistors, or 104 billion per chip. Per chip, this corresponds to an increase of 30 per cent compared to its predecessor. The chips are manufactured at TSMC in a process known as 4NP, a further development of 4-nanometre production. The structure width therefore remains the same as in the previous generation.

Nvidia uses the fast HBM3e memory type, with eight 24-gigabyte stacks totalling 192 gigabytes. The memory achieves a transfer rate of 8 terabytes per second.

There was hardly any more information about the internal structure at the presentation. Overall, the GB200 accelerator is said to be 30 times faster and 25 times more efficient than the H100 when compute and data precision are adapted accordingly. Huang cites the training of the chatbot ChatGPT as a comparison. This was trained within three months with 8,000 Hopper chips and a power draw of 15 megawatts. With Blackwell, only 2,000 chips and 4 megawatts would be required for the same training time.
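The training comparison can be checked with simple arithmetic. The following is a minimal sketch that uses only the four figures quoted above; the variable and function names are illustrative, and the per-chip value is total system power divided by chip count, not the power draw of a single GPU.

```python
# Figures from the keynote comparison as reported in this article.
HOPPER_CHIPS = 8_000
HOPPER_POWER_MW = 15.0
BLACKWELL_CHIPS = 2_000
BLACKWELL_POWER_MW = 4.0


def reduction_factor(before: float, after: float) -> float:
    """Return how many times smaller `after` is than `before`."""
    return before / after


chip_factor = reduction_factor(HOPPER_CHIPS, BLACKWELL_CHIPS)
power_factor = reduction_factor(HOPPER_POWER_MW, BLACKWELL_POWER_MW)

# Total system power divided by the number of chips, in kilowatts.
hopper_kw_per_chip = HOPPER_POWER_MW * 1_000 / HOPPER_CHIPS
blackwell_kw_per_chip = BLACKWELL_POWER_MW * 1_000 / BLACKWELL_CHIPS

print(f"Chips needed:  {chip_factor:.2f}x fewer")
print(f"Power needed:  {power_factor:.2f}x less")
print(f"System power per chip: {hopper_kw_per_chip} kW vs {blackwell_kw_per_chip} kW")
```

In this example, the chip count falls by a factor of 4 and the power draw by a factor of 3.75. The headline factors of 30 and 25 therefore presumably refer to other workloads or precision settings than this training scenario.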

Lower precision for greater efficiency

Blackwell's 4-bit floating point format (FP4) can represent only 16 states. This is less precise than wider floating point formats, such as the FP16 format used by Hopper. According to the manufacturer, however, this is sufficient and allows the accelerators to handle considerably less data with only a slight loss of accuracy. Besides increasing the processing speed, this also doubles the possible model size.
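To illustrate how coarse 16 states are, the following sketch decodes all 4-bit patterns. The article does not specify the bit layout, so the code assumes the common E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit, exponent bias 1). The function names are illustrative and not part of any Nvidia API.

```python
def decode_fp4_e2m1(bits: int) -> float:
    """Decode a 4-bit pattern, assuming the E2M1 layout with bias 1."""
    if not 0 <= bits <= 0b1111:
        raise ValueError("FP4 patterns must fit into 4 bits")
    sign = -1.0 if bits & 0b1000 else 1.0
    exponent = (bits >> 1) & 0b11
    mantissa = bits & 0b1
    if exponent == 0:
        # Subnormal range: no implicit leading one, so only 0 and 0.5.
        return sign * mantissa * 0.5
    # Normal range: implicit leading one, scaled by 2^(exponent - bias).
    return sign * (1 + mantissa / 2) * 2.0 ** (exponent - 1)


# All 16 bit patterns; +0 and -0 compare equal, leaving 15 distinct numbers.
ALL_VALUES = [decode_fp4_e2m1(b) for b in range(16)]
NON_NEGATIVE = sorted(v for v in set(ALL_VALUES) if v >= 0)


def quantize(x: float) -> float:
    """Round x to the nearest representable value (clamps out-of-range input)."""
    return min(ALL_VALUES, key=lambda v: abs(v - x))


print(NON_NEGATIVE)
print(quantize(2.4), quantize(5.2))
```

Under this assumption, the non-negative values are 0, 0.5, 1, 1.5, 2, 3, 4 and 6. Practical schemes typically combine such a coarse grid with per-block scaling factors so that the values cover the actual range of the weights; the article gives no details on how Nvidia handles this.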

Hopper already capitalised on the fact that transformers do not have to process all weights and parameters with high precision. The Transformer Engine, which is also used in the new chip generation, mixes the precise 16-bit floating point format (FP16) with the less precise 8-bit FP8. With Blackwell, Nvidia is now going one step further.
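The effect of the number format on model size can be estimated from the 192 gigabytes of memory mentioned earlier. The following is a rough sketch under simplifying assumptions: decimal gigabytes, memory used for weights only, and no overhead for activations, caches or scaling factors. The names are illustrative.

```python
# Memory per accelerator as stated in the article (decimal gigabytes).
HBM_CAPACITY_GB = 192

# Bits per weight for the formats discussed in the text.
FORMAT_BITS = {"FP16": 16, "FP8": 8, "FP4": 4}


def max_parameters_billion(capacity_gb: float, bits_per_weight: int) -> float:
    """Upper bound on the weights (in billions) that fit into the memory."""
    bytes_per_weight = bits_per_weight / 8
    return capacity_gb / bytes_per_weight


capacity = {
    name: max_parameters_billion(HBM_CAPACITY_GB, bits)
    for name, bits in FORMAT_BITS.items()
}

for name, billions in capacity.items():
    print(f"{name}: up to {billions:.0f} billion weights")
```

Each halving of the bit width doubles the upper bound: 96 billion weights at FP16, 192 billion at FP8 and 384 billion at FP4. Real models will fit fewer, since memory is also needed for data other than the weights.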
